When Written: Aug 2011

I was intrigued when an announcement came from Adobe for a new web design tool called ‘Muse’. It is currently on free trial but will become a subscription-based product, like Office 365, costing $15 a month.

Adobe’s Muse shows the way to go with HTML design tools

My first impressions of this product were very favourable: drag-and-drop positioning of all types of elements, and event-driven interaction achieved with a few clicks. The output from the tool is pretty clean HTML, with the ubiquitous batch of included libraries. Although these are large, they will be cached by the client’s browser, so they should not affect performance too much after the first download. Or will they?

This issue was raised during a presentation I attended at my local ‘chapter’ of the NXTGEN user group. The talk was about building high-performance web sites and what you can do to minimise the chances of your site going down should it become a ‘victim’ of its own fame via Slashdot or a Tweet from someone like Stephen Fry. The effect of such a mention on most web servers has become known as the server being ‘Fr(y)ied’. The problem with such events is the enormous amount of new traffic they generate. Almost every visitor is a new visitor, so nothing from your site will already be cached in their browser, and a single page view can result in a large number of HTTP requests.

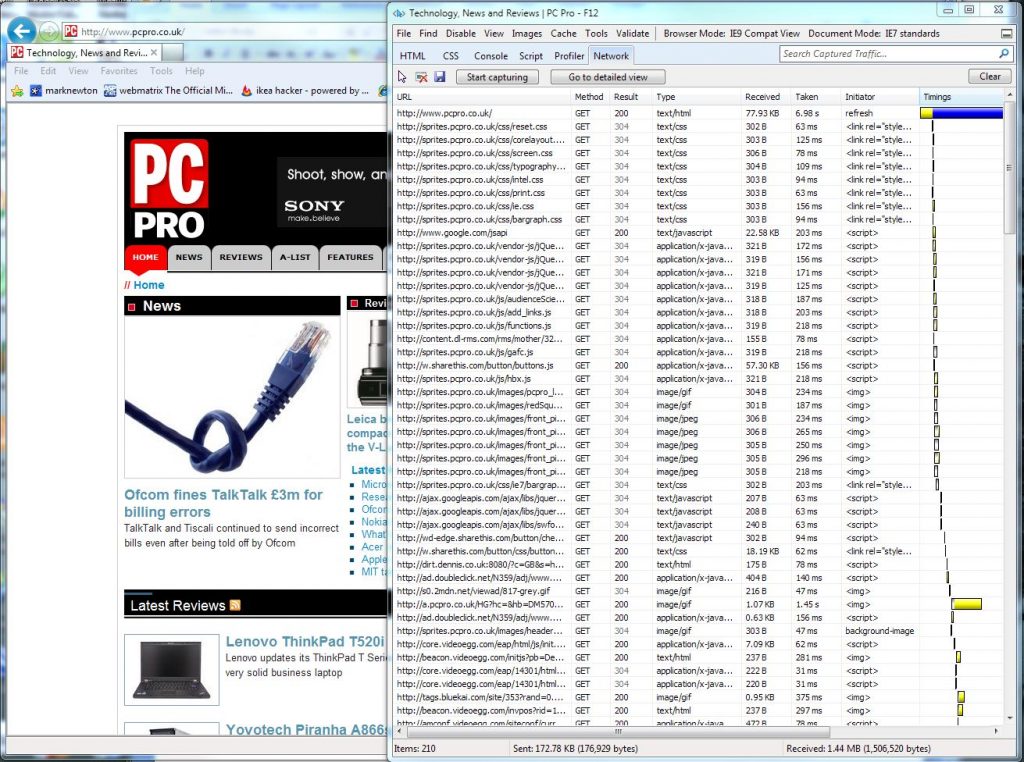

To demonstrate this I took a brief look at the PcPro home page, where a single page request results in over two hundred HTTP requests to various servers. In this case not all of the servers are run by Dennis Publishing, as some of the requests are for advertisements served by the various providers. But bear in mind that a typical single IIS server would struggle to serve more than 400 HTTP requests per second. With each new visitor creating 200-plus requests, it is easy to see how an influx of several thousand visitors can bring down a previously well-performing website.
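The arithmetic behind that claim can be sketched in a few lines of Python. This is a rough back-of-envelope illustration only: it uses the 400 requests per second and 200 requests per page view quoted above, assumes (pessimistically) that every request lands on the one server, and picks 5,000 visitors as an arbitrary stand-in for ‘several thousand’. The function names are made up for this example.

```python
def max_new_visitors_per_second(server_requests_per_second: float,
                                requests_per_page_view: float) -> float:
    """How many uncached page views one server can complete each second."""
    return server_requests_per_second / requests_per_page_view


def seconds_to_serve(visitors: int,
                     requests_per_page_view: float,
                     server_requests_per_second: float) -> float:
    """Time needed to work through a burst of first-time visitors."""
    total_requests = visitors * requests_per_page_view
    return total_requests / server_requests_per_second


# Figures from the article: 400 requests/s, 200 requests per page view.
print(max_new_visitors_per_second(400, 200))  # 2.0 new visitors per second

# Assumed burst size: 5,000 visitors arriving at once.
print(seconds_to_serve(5000, 200, 400))       # 2500.0 seconds, about 42 minutes
```

In other words, under these assumptions a single server copes with about two brand-new visitors a second, which is why a sudden burst is so damaging.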

One page request can result in several hundred HTTP requests

Now sites like PcPro do not run on a single server, and the web site itself has been optimised to cope with large amounts of traffic. But if your site does not have such an infrastructure, think twice before you ask the likes of Mr Fry to tweet about you.

The situation can be compounded if your site generates its pages dynamically, which most modern sites do. Almost all content management sites generate their pages dynamically, so the number of pages that can be served per second is more limited than if the web site were built from plain static HTML pages. One way around this performance hit is to enable page caching on the server; by doing this, a tenfold improvement in pages served per second can easily be achieved at no cost.
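To show the idea behind server-side page caching, here is a minimal sketch in Python. It is not how IIS or any particular content management system implements its cache; the `PageCache` class, the `render_page` function and the 30-second lifetime are all assumptions made up for illustration. The point is simply that the expensive page build happens once per lifetime rather than once per request.

```python
import time
from typing import Callable, Dict, Tuple


class PageCache:
    """Keeps rendered pages in memory and reuses them until they expire."""

    def __init__(self, render: Callable[[str], str], ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._render = render          # the slow, dynamic page builder
        self._ttl = ttl_seconds        # how long a cached page stays valid
        self._clock = clock            # injectable so it can be tested
        self._store: Dict[str, Tuple[float, str]] = {}
        self.render_count = 0          # how often the slow path was taken

    def get(self, path: str) -> str:
        now = self._clock()
        cached = self._store.get(path)
        if cached is not None and now - cached[0] < self._ttl:
            return cached[1]           # still fresh: skip the rendering work
        html = self._render(path)      # missing or expired: rebuild the page
        self.render_count += 1
        self._store[path] = (now, html)
        return html


def render_page(path: str) -> str:
    """Hypothetical stand-in for a database-backed page build."""
    return f"<html><body>Content for {path}</body></html>"


cache = PageCache(render_page, ttl_seconds=30)
for _ in range(1000):
    cache.get("/news")
print(cache.render_count)  # 1: a thousand requests, one render
```

The lifetime is the knob that trades freshness for throughput: the longer a page is kept, the fewer renders are needed, but the longer a visitor might be shown an out-of-date copy.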

The only drawback is that if a particular page’s content is changing rapidly, users may not see the latest information for a little while. For most sites this is not an issue; only sites such as a sports results site need to be careful about using this technique. The other obvious way to increase your web serving capacity is to increase the number of web servers and build a server farm.

There are two problems with this approach. The first is that the load-balancing hardware you need to distribute the HTTP requests across the farm of servers can be very expensive if you buy dedicated hardware. There is a cheaper alternative, though, and that is to run a Linux box with some software called HAProxy on it. This software is free and will turn the box into a very customisable load balancer, with failover to a second box should you so wish.

The other problem with most load-balancing solutions is that requests are distributed across the servers on a ‘round robin’ basis. If your application uses sessions to track variables, and the session data is held on the individual web server, these will not work across the farm, as successive page requests from the same visitor will be served by different servers. You will need to architect your solution to pass such variables via the URL string. This has the useful spin-off that your application does not need to place a cookie on the user’s browser, so you don’t need to ask the user’s permission to do so, as the new law I wrote about previously now dictates.
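The session problem is easy to reproduce in a toy simulation. The Python sketch below is purely illustrative: the `Server` class, the `views` counter and the `/page` URL are invented for the example, and each server is assumed to keep its sessions in its own memory. It sends the same visitor’s requests round robin across two servers, first using per-server sessions and then carrying the counter in the URL instead.

```python
from itertools import cycle
from urllib.parse import parse_qs, urlencode, urlsplit


class Server:
    """A toy web server with its own private in-memory session store."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.sessions = {}  # not shared with the other servers in the farm

    def handle_with_session(self, session_id: str) -> int:
        """Count page views using this server's local session data."""
        data = self.sessions.setdefault(session_id, {"views": 0})
        data["views"] += 1
        return data["views"]

    def handle_with_url_state(self, url: str):
        """Count page views using a counter carried in the query string."""
        query = parse_qs(urlsplit(url).query)
        views = int(query.get("views", ["0"])[0]) + 1
        next_url = "/page?" + urlencode({"views": views})
        return views, next_url


def simulate(requests: int = 4, server_count: int = 2):
    servers = [Server(f"web{i}") for i in range(1, server_count + 1)]

    # Same visitor, requests handed out round robin, state in sessions.
    farm = cycle(servers)
    session_counts = [next(farm).handle_with_session("visitor-1")
                      for _ in range(requests)]

    # Same visitor again, but the state travels in the URL.
    farm = cycle(servers)
    url, url_counts = "/page", []
    for _ in range(requests):
        views, url = next(farm).handle_with_url_state(url)
        url_counts.append(views)
    return session_counts, url_counts


print(simulate())  # ([1, 1, 2, 2], [1, 2, 3, 4])
```

With sessions, each server only sees every other request, so the count stutters; with the value in the URL, whichever server picks up the request has everything it needs.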

Article by: Mark Newton

Published in: Mark Newton